I’ve been talking to the ABR recently about OLA scoring. I’ve made no secret of my opinions on the actual content of the ABR’s 52-question-per-year MOC qbank and on how likely it is to ensure anything meaningful about a radiologist’s ability to practice.

But I’ve been especially interested in how OLA is scored. For quite a while there was a promise that there would be a performance dashboard “soon” that would help candidates figure out where they stood prior to the big 200-question official “performance evaluation.”

And now it’s here. And, to their credit, the ABR released a blog post, How OLA Passing Standards and Cumulative Scores are Established, that really does clarify the process.

How Question Rating Works

In short, the passing threshold is entirely based on the ratings from diplomate test-takers, who can voluntarily rate a question by answering this: “Would a minimally competent radiologist with training in this area know the answer to this question?”

I think that wording is actually a little confusing. But, regardless, the fraction of raters who answer yes determines the Angoff rating for that question. Your passing threshold is the average of those ratings across all the questions you’ve taken: match or beat that percentage correct and you pass, fall below it and you fail. Because different people get different subsets of a large question database, currently “the range of passing standards is 50% to 83%.”
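
To make that arithmetic concrete, here is a minimal sketch. The ABR has not published any code, so the function names and the vote counts below are made up for illustration; the only things taken from the post are that a rating is the fraction of yes votes and that the standard is the average rating over your questions.

```python
def angoff_rating(yes_votes: int, total_votes: int) -> float:
    """Fraction of raters who said a minimally competent radiologist would know the answer."""
    if total_votes <= 0:
        raise ValueError("a question needs at least one rating")
    return yes_votes / total_votes


def passing_standard(ratings: list[float]) -> float:
    """Average Angoff rating over the questions one participant answered."""
    if not ratings:
        raise ValueError("no rated questions")
    return sum(ratings) / len(ratings)


# Hypothetical (yes votes, total votes) for three questions.
votes = [(8, 10), (12, 20), (21, 30)]
ratings = [angoff_rating(yes, total) for yes, total in votes]
standard = passing_standard(ratings)
print(f"passing standard: {standard:.0%}")
```

With these invented votes the three ratings are 80%, 60%, and 70%, so this participant would need 70% correct to pass. Someone served a different subset of questions would get a different standard, which is where the wide range comes from.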

That’s a big range.

One interesting bit is that a question isn’t scorable until it’s been answered by at least 50 people and rated by 10, which means that your performance can presumably keep changing for some time even when you’re not actively answering questions (i.e., if some questions weren’t yet scorable when you first answered them). Back in the summer of this year, approximately 36% of participants had rated at least one question. It may take some time for rarer specialties like peds to have enough scorable questions to get a preliminary report.
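
Here is a small sketch of why a score can drift without any new answers. The two thresholds come from the ABR post; the `Question` record, its field names, and the sample history are my own assumptions, not the ABR's data model.

```python
from dataclasses import dataclass
from typing import Optional

MIN_ANSWERS = 50  # from the ABR post: answered by at least 50 people
MIN_RATINGS = 10  # from the ABR post: rated by at least 10 people


@dataclass
class Question:
    # Hypothetical record of one question in a participant's history.
    times_answered: int
    times_rated: int
    answered_correctly: bool


def is_scorable(q: Question) -> bool:
    return q.times_answered >= MIN_ANSWERS and q.times_rated >= MIN_RATINGS


def percent_correct_on_scorable(history: list[Question]) -> Optional[float]:
    """Percent correct over scorable questions only; None if nothing is scorable yet."""
    scorable = [q for q in history if is_scorable(q)]
    if not scorable:
        return None
    return 100 * sum(q.answered_correctly for q in scorable) / len(scorable)


history = [
    Question(120, 15, True),
    Question(60, 4, False),  # answered incorrectly, but not yet rated by 10 people
    Question(200, 30, True),
]
before = percent_correct_on_scorable(history)

history[1].times_rated = 10  # other diplomates rate it later
after = percent_correct_on_scorable(history)
print(before, round(after, 1))
```

In this made-up history the participant answers nothing new, yet the percent correct falls from 100 to about 66.7 once the missed question collects enough ratings to count.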

The Performance Report

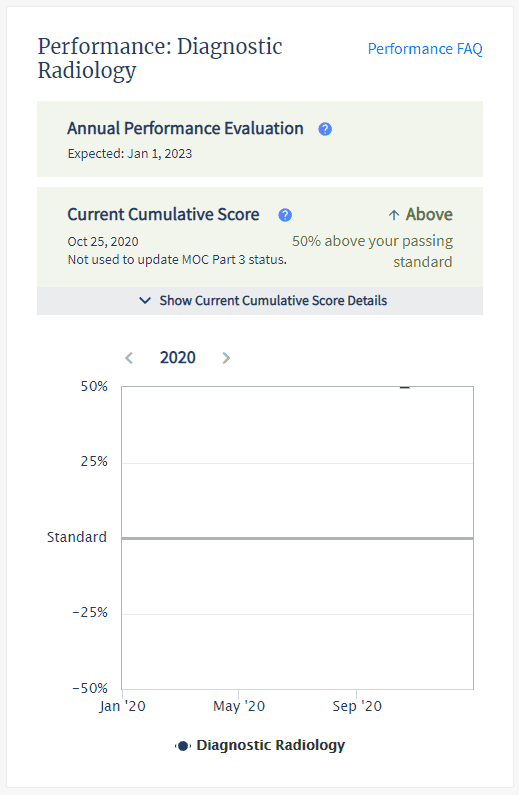

The current performance dashboard is a little confusing. Here’s mine:

It includes neither the actual passing threshold nor your actual performance. It’s got a cute little graph (with currently only one timepoint), but it reports performance simply as a percentage above or below the passing standard. I wasn’t quite sure what that meant mathematically, but the ABR actually addressed that in the post:

If the participant is meeting or exceeding that passing standard, their current cumulative score will indicate what percentage above the passing standard has been achieved. For example, if the average rating of the questions is 70 percent, the participant is required to answer at least 70 percent of the questions correctly within the set to meet the passing standard. If a participant’s current cumulative score indicates that they are 21 percent above the passing standard, then the participant would have successfully answered 85 percent of their questions correctly.

Based on that paragraph, here is their math for passing individuals (with the percentage above written as a decimal):

your percentage correct = passing threshold*percentage above + passing threshold = passing threshold*(1+percentage above)

In their example, that’s 70*0.21 + 70 = 70*1.21 = 84.7, which rounds to 85.
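
The same relationship as a tiny function, for anyone who wants to plug in other numbers. This is only my reading of the ABR's worked example, and the function name is mine.

```python
def percent_correct(passing_threshold: float, percent_above: float) -> float:
    """Percent correct implied by a threshold and a dashboard 'percent above' value.

    Both arguments are whole-number percentages, e.g. 70 and 21.
    """
    return passing_threshold * (1 + percent_above / 100)


# The ABR's example: a 70% standard and a score 21% above it.
print(round(percent_correct(70, 21), 1))
```

That prints 84.7, consistent with the 85 percent in the ABR's example after rounding.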

I’d rather just see the underlying numbers because the ABR doesn’t make it easy to see your cumulative performance at a glance. It’d be nice to see the actual passing threshold and your actual percent. But with this information, you can now—with some effort—estimate these numbers.

First, get your own raw performance. This isn’t straightforward. You’ll need to go to “My OLA History.” Filter for your incorrect questions by clicking on “Your Answer” and selecting “incorrect.” Count that hopefully small number of questions and compare it with the total number you’ve answered (if you’ve been doing more than 52 per year, getting that total is going to be tedious to count manually).

So, for example, I’ve answered 104 questions and gotten 3 incorrect, which nets me a performance of 97%. My percentage above passing is reported as 50%, though it’s worth noting that the scale maxes out at plus/minus 50. That cap can’t be mathematically correct if the minimum passing threshold can be as low as 50%, since a perfect score against a 50% threshold would be 100% above the standard. So who knows. Anyway:

97 = X*1.5

X = 97/1.5 = 64.667

So we can estimate that I needed to get ~65% correct to meet the passing threshold given my question exposure, which is right in the middle of the current reported range.
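
If you'd rather not do the algebra by hand, here is a short sketch of the same back-calculation. The function name and argument names are mine, and it assumes the dashboard's number really is the ratio described in the ABR's example.

```python
def estimate_passing_threshold(answered: int, incorrect: int, percent_above: float) -> float:
    """Back out the passing threshold (in percent) from a manual count and the dashboard value.

    percent_above is the dashboard number as a whole percentage, e.g. 50 for "50% above".
    """
    if answered <= 0 or not 0 <= incorrect <= answered:
        raise ValueError("counts must satisfy 0 <= incorrect <= answered, with answered > 0")
    if percent_above <= -100:
        raise ValueError("percent_above must be greater than -100")
    raw_percent = 100 * (answered - incorrect) / answered
    return raw_percent / (1 + percent_above / 100)


# My numbers: 104 answered, 3 incorrect, dashboard reads 50% above passing.
estimate = estimate_passing_threshold(104, 3, 50)
print(f"{estimate:.1f}")
```

This gives about 64.7 rather than 64.667 only because it doesn't round my raw score down to 97 first. And if the dashboard really does cap at 50, the true percentage above could be higher than displayed, which would make this estimate an upper bound on the threshold rather than the threshold itself.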

Caveats: you don’t know how many, if any, of your questions are currently non-scorable, nor is it easy to see how many, if any, were subsequently nulled after being deemed invalid. So this really is just an estimate.

In related news, it’s lame there isn’t a way to see exactly how many questions you’ve answered and your raw performance at a glance. I assume they are trying to simplify the display to account for the varying difficulty of the questions, but it feels like obscuration.

Self-governance

The ABR has stated that the OLA question ratings from the community are all that’s used for scoring. While volunteers, trustees, etc. are all enrolled in OLA and presumably rating questions, the ABR says that what the greater community says goes.

So we are to judge what a radiologist should know based on what about 1/3 of MOC radiologists think a radiologist should know. There is no objective standard or aspirational goals. Radiologists can cherry-pick their highest-performing subspecialties and maintain their certification by answering a handful of questions in their favored subjects that are essentially vetted by their peers, who may not exactly be unbiased in the scoring process.

I don’t necessarily see a problem with that.

What I do see is more evidence of a bizarre double standard between what we expect from experienced board-certified radiologists and what we expect from trainees.

In court, the ABR has argued that initial certification and maintenance of certification are two aspects of the same thing and not two different products. As someone who has done both recently, they don’t feel the same.

Unlike with OLA, neither residents nor the broad radiology community have any say in which questions meet the minimal competence threshold for the Core and Certifying exams. For those exams, small committees decide what a radiologist must know.

You earn that initial certification and suddenly all of that mission-critical physics isn’t important. Crucial non-interpretive skills are presumed to last forever. And don’t forget that everyone should know how to interpret super niche exams like cardiac MRI (well, at least for two days).

It either matters or it doesn’t.

Why does physics, as tested by the ABR (aka not in real life), matter so much that it can single-handedly cause a Core exam failure for someone still in the cocoon of a training program but then suddenly not matter a lick when the buck stops with you?

The fact is that most practicing radiologists would not pass the Core Exam without spending a significant amount of time re-memorizing physics and nuclear medicine microdetails, and the new paradigm of longitudinal assessment doesn’t allow for the once-per-decade cramming that passing a broad recertification exam would require.

Meanwhile, diplomates are granted multiple question skips. These are designed to let MOC participants pass on questions that don’t mesh with their clinical practice, but they can just as easily be used to bypass questions you simply don’t know the answer to. Especially for the performance-conscious and those with borderline scores, this creates a perverse incentive to miss out on rare learning opportunities.

But my point here is not that OLA should be harder.

My point is that certification should mean the same thing with the same standard no matter how long you’ve held it.

4 Comments

Ben,

You have missed three questions!

Maybe you should seriously consider a review course. It may help you perform better.

Good luck

I’m ashamed to admit I missed another one this year out of the 38 I’ve done so far.